
New Domain 2 - Design for New Solutions

4. IDS/IPS in Cloud

Hey everyone and welcome back. In today's video, we will be discussing IDS/IPS solutions and how they can be implemented in cloud environments. Let's understand the difference between a firewall and an IDS/IPS with a simple analogy. Let's say that you have a security guard here, and this is the gate; think of this as the firewall. A firewall prevents people who try to get in through a different entry, say through a back door, from going inside. But if people come through the official door, they are let in. A network firewall works in a very similar way: if port 80 is open in the firewall, traffic to it will pass through, and if port 22 is closed, the firewall will block that traffic from coming inside. The problem is that the firewall does not inspect what is actually going through the gate.

Now, if you go to an airport, there is a scanner where your luggage is scanned, so everything inside the luggage gets inspected. You might be carrying weapons or other unauthorised items that are not allowed inside. This is the responsibility of an IDS and IPS. Let's say someone is carrying an exploit inside their packets, or something like a SQL injection. Those kinds of malicious packets are something an IDS/IPS can detect and block.

If you look at a TCP/IP packet, it typically contains the source address, the destination address, the source port, and the destination port. These are the four major components on which a firewall typically operates. However, the packet also carries the TCP data. An attacker can send a malicious packet that exploits the server through this data, due to which the server can be compromised. An IDS/IPS typically looks into this TCP data. Let me quickly show you this in Wireshark. This is my Wireshark; let's click on one of the packets.
Now, if you look into the IP header over here, it contains the source and destination addresses. And you have the TCP header, which contains the source port and the destination port. This is where a firewall typically operates, but you also have the data. The data is very important, because if you do not scan it, or do not block it when it is malicious, the chances of your server being exploited are much higher. So it is very important that you also inspect the data part. In this particular packet, the data is encrypted. Typically, if you are deploying an IDS/IPS solution, you should also provide it with your private key so that the IDS/IPS can decrypt the encrypted data.

The deployment of an IDS/IPS architecture in cloud environments is a little different. In AWS, you do not have access to the underlying network, so you cannot create a traditional SPAN port or anything similar. Instead, you deploy your IDS/IPS appliance, let's say in a public subnet, and all the instances have an IDS/IPS agent installed. The agent communicates with the IDS/IPS appliance, and the rules are applied accordingly. Remember this architecture, because when you design an IDS/IPS solution in AWS, you will have to approach it in this specific way. There are other ways, but I don't recommend them, as they can bring your servers down. So this is the most ideal way.

When you look in the AWS Marketplace for IDS/IPS, there are various solutions available. My preferred one is Trend Micro Deep Security, which is quite good. I have been using it for three to four years in an environment with more than five or six compliance requirements, ranging from PCI DSS to ISO and various country-level regulations, and it really works well.
So that is my recommendation. A few things to remember before we conclude this video, which is about intrusion detection systems (IDS) and intrusion prevention systems (IPS). An intrusion detection system only detects malicious packets; it does not block them. An intrusion prevention system, if it sees malicious data in a packet, goes ahead and blocks the packet. In the AWS environment, you install the IDS/IPS agents on your EC2 instances, and they communicate with the central IDS/IPS appliance to receive the rule configuration.
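To make the firewall-versus-IDS/IPS distinction concrete, here is a toy Python sketch. It assumes a made-up packet model, a single open port (80), and a handful of made-up byte signatures; real IDS/IPS engines use far richer rule languages and protocol decoding. The firewall check looks only at header fields, the signature check looks inside the payload, and the mode decides whether a match only raises an alert (IDS) or also drops the packet (IPS).

```python
from dataclasses import dataclass
from enum import Enum


@dataclass
class Packet:
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    payload: bytes  # the TCP data that a port-based firewall never looks at


class Mode(Enum):
    IDS = "detect"   # alert only, the packet still goes through
    IPS = "prevent"  # alert and drop the packet


# Hypothetical rule sets, for illustration only.
OPEN_PORTS = {80}
SIGNATURES = [b"' OR '1'='1", b"UNION SELECT", b"../../etc/passwd"]


def firewall_allows(pkt: Packet) -> bool:
    """Header-only decision: is the destination port open?"""
    return pkt.dst_port in OPEN_PORTS


def matches_signature(pkt: Packet) -> bool:
    """Payload inspection: does the TCP data contain a known attack pattern?"""
    data = pkt.payload.upper()
    return any(sig.upper() in data for sig in SIGNATURES)


def process(pkt: Packet, mode: Mode, alerts: list) -> bool:
    """Return True if the packet reaches the server, False if it is dropped."""
    if not firewall_allows(pkt):
        return False
    if matches_signature(pkt):
        alerts.append(f"{pkt.src_ip}:{pkt.src_port} -> {pkt.dst_ip}:{pkt.dst_port}")
        if mode is Mode.IPS:
            return False
    return True


if __name__ == "__main__":
    attack = Packet("203.0.113.10", "10.0.1.5", 51515, 80,
                    b"GET /login?user=admin' or '1'='1 HTTP/1.1")
    alerts: list = []
    print(process(attack, Mode.IDS, alerts))  # True: detected, but not blocked
    print(process(attack, Mode.IPS, alerts))  # False: detected and blocked
    print(len(alerts))                        # 2
```

The same malicious request passes the port-80 firewall rule in both cases; only the payload inspection notices it, and only IPS mode stops it.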

5. Understanding Principle of Least Privilege

Hey everyone and welcome back to the Knowledge Portal video series. Today we will be speaking about the principle of least privilege, which is one of the most important principles, specifically if you're working as a solutions architect or a security engineer. So let's understand what this principle means. By definition, the principle of least privilege is the practice of limiting access to the minimal level that still allows normal functioning for the entity requesting the access. As far as system administrators are concerned, users should only have access to the data and hardware they need to perform their associated duties. If a developer wants access to a specific log file, he should only have access to that log file, and he should not be able to do other things through which he can gain information he is not authorised to get. Very often, whenever a developer or someone else requests access, the system administrator or even a solutions architect blindly grants access without any limitations, which leads to a lot of breaches within organisations.

Let's take a sample use case where Alice has joined your organisation as an intern system administrator. Since your infrastructure is hosted in AWS, you need to give Alice access so she can view the AWS console, and the question is, what kind of access will you give? There are three options here. The first option is to share the root credentials of your AWS account. Technically, this option would fulfil the use case. The second option is to create a new user for Alice with full permissions for everything.
If you compare the first and second options, you will find that the second option is much better. If you share the root credentials with Alice, it is difficult to track whether an action was performed by you or by Alice, and tracking individual users is very important. The third option is to create a new user named Alice with read-only access, and out of the three, this is the one that suffices. So whenever someone asks you for access, you should only give them the access that is required.

Let me give you an example. I used to work in a payments organisation where security was considered a top priority. Any time a developer wanted access to a specific server, he had to create a ticket with his manager's approval; that was the first step. In the second step, after the manager approved, we verified that there was a genuine business justification. If there was, we asked him which commands he wanted to run on the server and which log file he wanted to access. He would say, "I want to access the application log file, and I will need three commands: less, tail, and more." So we would allow him only those three commands, and only on that specific log file. It was quite a strict process. Anyway, we are going a bit off topic.

Let me show you a practical example so that we can see exactly how this works. I have two terminals open here, and both are connected to the test server. I will create a new user called demo user, and once the user is created, I will log in as the demo user from the second terminal. Since I have only run the useradd command, I have not explicitly given this user any administrative privilege. So let's look into what a normal user can do once he has access to a Linux system.
If I run whoami, you can see it shows the demo user. A normal user without any explicit privilege can run the netstat command and see which ports are listening on the server. I can see port 80, port 22, and a lot of other ports that are listening. A normal user can also list the packages installed on the server, along with the version number of each package. If I run rpm -qa | grep nginx, you can see that NGINX is installed, along with its exact package version. This is something you do not want others to know, because if that version has any vulnerabilities, it makes the life of a hacker quite easy. Let's try something different. Let me go to /etc/nginx and run ls. And if I open nginx.conf with nano, you can see that a normal user can read the configuration of the web server as well. There are a lot of other things a normal user can do. For example, he can go into the /boot directory, where the boot loader and kernel files are stored. So the principle of least privilege is not being followed here, because even a normal user with basic access can do so many things on the server, and this is something you do not want.

So I have created a simple script called polp, which stands for "principle of least privilege." Let me run this script; what it does is de-escalate the privileges of the demo user. Let's wait a few seconds, and you can see that the minimum privileges have been applied. Perfect. Now let me try to go into /boot, and as you see, it gives me permission denied. Let me try /etc/nginx again, and you can see that permission is denied there as well.
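A rough way to spot the kind of exposure shown in the demo is to look for files that the "other" permission class can read. This Python sketch only inspects each file's own mode bits; whether a user can actually reach the file also depends on the execute bits of its parent directories, and the /etc/nginx path in the usage lines is just the demo's example.

```python
import os
import stat


def world_readable_files(root: str) -> list:
    """Return regular files under `root` that any local user ("other") can read."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path)  # follows symlinks
            except OSError:
                continue  # unreadable or dangling entry, skip it
            if stat.S_ISREG(st.st_mode) and st.st_mode & stat.S_IROTH:
                found.append(path)
    return sorted(found)


if __name__ == "__main__":
    # Example target from the demo; adjust the path for your own server.
    for path in world_readable_files("/etc/nginx"):
        print(path)
```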
So what we have done is remove the user's privileges so that he can only do the things he needs, and cannot go around and see what else is on the server. This is what the principle of least privilege means.

Going back to the PowerPoint presentation, we have one more use case, where a software developer wants to access an application server to see the logs. As the system administrator, you need to provide him access, and the question is, how will you give it? This is a very generic use case. The first option is to create a user with the useradd command and share the details. This is what we just demoed, and we saw exactly what a user can do if you just add him with useradd, so this is not what you want. The second option is to create a user with the useradd command and add him to the sudoers list. The sudoers file defines which commands a normal user can execute as an administrative user. The third option is to ask the developer which log file he wants to access, verify whether his access is justified, and allow him access to that specific log file and nothing else. The third option is much more granular than the other two, so this is the one you want to go with.

Let me give you a real-world scenario, which applies specifically to payments organisations, where audits happen regularly. Every year when an auditor comes to your organisation, he'll ask you to show the list of users who have access to, say, AWS or a Linux server. When you show him the list, he'll pick a random user and ask you, as the system administrator, to justify why exactly that user has access to the server and, if he has access, what commands he can run on that specific server.
This is very difficult to answer, and if you have not designed your access around the principle of least privilege, you will really be in trouble at audit time.
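The ticket-based process described in this lecture can be modelled as a small record per grant, which also makes the auditor's questions easy to answer. The record format, field names, and example values below are hypothetical, and the command check only illustrates the idea: on a real server the restriction would be enforced by the operating system (for example through sudoers rules or file permissions), not by a Python function, and interactive pagers such as less can open other files from inside the pager.

```python
import shlex
from dataclasses import dataclass, field


@dataclass
class AccessGrant:
    """One approved, ticketed access request (hypothetical record format)."""
    user: str
    server: str
    log_file: str
    commands: set = field(default_factory=set)
    justification: str = ""


def is_permitted(grant: AccessGrant, command_line: str) -> bool:
    """Allow only an approved command, run against the approved log file."""
    parts = shlex.split(command_line)
    if not parts or parts[0] not in grant.commands:
        return False
    # Ignore flags ("-f") and numeric option values ("-n 100"); every other
    # argument must be the approved log file.
    targets = [p for p in parts[1:] if not p.startswith("-") and not p.isdigit()]
    return targets == [grant.log_file]


def audit_findings(grants: list) -> list:
    """List the grants an auditor would question."""
    findings = []
    for g in grants:
        if not g.justification.strip():
            findings.append(f"{g.user}@{g.server}: no business justification")
        if not g.commands:
            findings.append(f"{g.user}@{g.server}: no command scope defined")
    return findings


if __name__ == "__main__":
    dev = AccessGrant("dev1", "app-server-1", "/var/log/app/application.log",
                      {"less", "tail", "more"}, "TICKET-123: debug checkout errors")
    print(is_permitted(dev, "tail -n 100 /var/log/app/application.log"))  # True
    print(is_permitted(dev, "cat /etc/nginx/nginx.conf"))                   # False
```

Keeping the justification and the command scope in the same record is what lets you answer the auditor's "why does this user have access, and what can he run?" for any randomly picked user.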

6. IAM Policy Evaluation Logic

Hey everyone and welcome back to the Knowledge Portal video series. Today we will discuss the policy evaluation logic in AWS IAM. This is quite an important topic, specifically when you are debugging IAM policy-related issues. So let's understand how exactly policies are evaluated in IAM. This is quite a nice diagram; all credit goes to the AWS documentation. Let's understand the decision-making process. For any decision within AWS, such as accessing an S3 bucket or starting an EC2 instance, the starting assumption is that the request will be denied. It works on a deny-by-default approach, so the first step is that the decision starts at deny. After that, all policies associated with the user or IAM role are evaluated; this is the second step. Before we go into the third and fourth steps, let's understand the first two, because this is quite an important thing to understand.

Let me go to the Alice user here. There are three policies attached, and none of them relate to S3. So if I go to the S3 console as Alice, you can see that access is denied. What really happens is that, by default, all access is denied; that is the first step. The second step is that whenever I open S3, the policies attached to the user are checked. Since there is no policy that explicitly allows S3, the permission is denied. So let me attach the S3 read-only policy; let me just search for "S3." Now we have given the S3 read-only permission. So remember: the first step is the default deny, and in the second step the policies attached to the entity, in our case a user, are checked.
Now we have one policy, and if you look at its JSON, the policy allows s3:Get* and s3:List* for the S3 service, and the resource is an asterisk. If you look at the effect, the effect is Allow, so this is an explicit allow. Now if I refresh the console page, you see I am able to access the S3 console. So this is how the policy evaluation logic works: the first step is to deny by default, and the second is to review all of the attached policies to see whether any allow is mentioned for that specific resource. The third step checks whether there is any explicit deny in the policies. If an explicit deny is present, the entire request is denied. If there is no explicit deny and an allow is present, the decision is allow.

I'm sure this is not completely clear yet, so let's understand it with a use case. In our example scenario, there are five S3 buckets available, and we want to give access to all of the buckets except one. Quite an easy scenario. To do this, only two steps are required. The first is to allow access to all the S3 buckets, which grants the user access to all five buckets here. The second is to deny access to bucket five. So we are allowing access to all the S3 buckets and then explicitly denying access to one specific bucket. You may ask why this is important. Let's assume that next week ten more buckets are added. To satisfy this scenario, the user needs to have access to those ten new buckets as well.
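The wildcards in these policies are what make them cover new actions and new buckets automatically. Below is a small Python approximation using fnmatch; it assumes IAM-style `*` and `?` wildcards, treats action names case-insensitively (as IAM does), and treats ARNs case-sensitively. fnmatch also understands `[...]` character classes, which IAM does not, so this is only an approximation.

```python
from fnmatch import fnmatchcase


def action_matches(pattern: str, action: str) -> bool:
    """IAM action names are case-insensitive; '*' matches any run of characters."""
    return fnmatchcase(action.lower(), pattern.lower())


def resource_matches(pattern: str, arn: str) -> bool:
    """ARNs are compared case-sensitively in this sketch."""
    return fnmatchcase(arn, pattern)


# The read-only statement described above.
read_only = {"Effect": "Allow", "Action": ["s3:Get*", "s3:List*"], "Resource": "*"}

if __name__ == "__main__":
    for action in ("s3:GetObject", "s3:ListAllMyBuckets", "s3:PutObject"):
        print(action, any(action_matches(p, action) for p in read_only["Action"]))
    # A wildcard resource also covers buckets that do not exist yet.
    print(resource_matches("arn:aws:s3:::*", "arn:aws:s3:::bucket-created-next-week"))
```

Running it prints True for s3:GetObject and s3:ListAllMyBuckets, False for s3:PutObject, and True for the not-yet-created bucket, which is exactly why "allow everything, then deny one" keeps working as buckets are added.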
This is why we allow access to all the S3 buckets by default. So let's look at how this would be designed. First, I will delete the existing policy. Perfect. For our demo, I'll attach a policy that gives S3 full access, AmazonS3FullAccess. That means the user will be able to do everything in S3, which takes care of the first step: allow access to all the buckets in S3. The second condition says we want to give access to all the buckets except one, the fifth one. If we quickly navigate to the S3 console, this is the bucket we will be preventing access to; it contains the KP Labs logs. So we will prevent the user Alice from accessing this bucket, and other than this, Alice should be able to access any bucket.

Now we will create one more policy. I'll use the policy generator; I hope you are now aware of how policies and the policy generator work. Select Amazon S3, set the effect to Deny, and for the actions I will select all actions. Now comes the ARN. We have already discussed how an ARN is formulated, so I'll copy an S3 ARN, paste it here, and rename it to the bucket name, which is the KP Labs logs bucket. Let me quickly verify that it is the right one. Perfect, it is. I'll click on "Add statement," then "Next," and name the policy to indicate that it denies this S3 bucket. If you look here, the effect is Deny, the action is s3:*, and in the resource we have given the bucket ARN. I'll click "Validate Policy" and then "Apply Policy." So now I have one policy that gives the user full access to S3 buckets, and a second policy that says do not allow access to this one bucket. Which one of them would win? Let's find out.
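For reference, the two policies built in this demo could be expressed as the following JSON documents, shown here as Python dictionaries. The bucket name kplabs-logs is a hypothetical stand-in for the "KP Labs logs" bucket. The demo put only the bucket ARN in the deny statement; this sketch also lists the `bucket/*` ARN, because object-level actions such as s3:GetObject are evaluated against object ARNs rather than the bucket ARN.

```python
import json

# Hypothetical bucket name standing in for the "KP Labs logs" bucket in the demo.
LOGS_BUCKET = "kplabs-logs"

# Policy 1: full access to S3 (comparable to attaching AmazonS3FullAccess).
allow_all_s3 = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}],
}

# Policy 2: explicit deny on the one protected bucket.
deny_logs_bucket = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Deny",
            "Action": "s3:*",
            "Resource": [
                f"arn:aws:s3:::{LOGS_BUCKET}",    # bucket-level actions, e.g. s3:ListBucket
                f"arn:aws:s3:::{LOGS_BUCKET}/*",  # object-level actions, e.g. s3:GetObject
            ],
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(deny_logs_bucket, indent=2))
```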
I will refresh the S3 console, and if I try to open this bucket, you see it gives me access denied. If I try to open the dev bucket, I can see the files in it. Now let's do one thing: let me edit the deny policy we created, put an asterisk in the resource, validate it, and click Save. So now the first policy allows full access to S3, and the second policy denies all access to S3. Which one would win? Let me refresh the page, and you can see that access is denied. This means the deny policy takes precedence over the allow policy. So always remember: if you have both a deny and an allow for the same resource, the deny takes precedence. This is very important to understand, and it is exactly what the documentation states: the first thing checked is whether there is an explicit deny. If there is, the final decision is deny. If there is no explicit deny and there is an allow, the final decision is allow. That is what these points mean. I hope you got the basics of the decision-making process.

You also have to understand the difference between an explicit deny and a default deny. A request is denied by default if there is no allow policy present for the resource. So if there is no policy attached to a user and the user tries to open the S3 console, the request is denied by default. An explicit deny is a deny condition that we have written explicitly, like the one here, where the effect is "Deny." So when no policies are attached to a user and the user tries to do something, the first step of the evaluation logic applies: the decision starts with deny. And this is the decision-making process.
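The four steps can be summarised in a short Python sketch. It is a deliberately simplified model that covers only identity-based policies attached to a user or role (no resource policies, permission boundaries, SCPs, or conditions), and it reuses fnmatch as an approximation of IAM wildcard matching. It returns which kind of decision was reached, so the difference between an explicit deny and the default deny stays visible.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    return [value] if isinstance(value, str) else list(value)


def _matches(patterns, value, ignore_case=False):
    if ignore_case:
        value = value.lower()
        return any(fnmatchcase(value, p.lower()) for p in _as_list(patterns))
    return any(fnmatchcase(value, p) for p in _as_list(patterns))


def evaluate(policies, action, resource):
    """Simplified evaluation of identity-based policies only.

    Returns "Allow", "ExplicitDeny" or "ImplicitDeny".
    """
    allowed = False  # step 1: every decision starts at deny
    for policy in policies:  # step 2: evaluate all attached policies
        for stmt in policy["Statement"]:
            if not (_matches(stmt["Action"], action, ignore_case=True)
                    and _matches(stmt["Resource"], resource)):
                continue
            if stmt["Effect"] == "Deny":
                return "ExplicitDeny"  # step 3: an explicit deny always wins
            allowed = True
    # step 4: an allow with no explicit deny; otherwise the default deny stands
    return "Allow" if allowed else "ImplicitDeny"


if __name__ == "__main__":
    full_s3 = {"Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}]}
    deny_all_s3 = {"Statement": [{"Effect": "Deny", "Action": "s3:*", "Resource": "*"}]}
    bucket = "arn:aws:s3:::dev"
    print(evaluate([], "s3:ListBucket", bucket))                      # ImplicitDeny
    print(evaluate([full_s3], "s3:ListBucket", bucket))               # Allow
    print(evaluate([full_s3, deny_all_s3], "s3:ListBucket", bucket))  # ExplicitDeny
```

The three calls mirror the demo: no policy attached, S3 full access only, and S3 full access plus a deny on every resource.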

7. AWS Security Token Service

Hey everyone, and welcome to the Knowledge Portal video series. Today I decided to get up early; I actually woke up at 3:30 in the morning, and we are recording the first lecture of the day at 5:55. It appears that our neighbours have also woken up quite early, so before they start cooking and you hear the noise, let's go ahead and complete our lectures.

Today's topic is AWS STS, which is a pretty interesting topic as far as IAM is concerned. Before we touch on STS, let's revise a few things. We have already discussed how an IAM role works in a previous lecture. We have an EC2 instance here and an S3 bucket, which is an AWS resource. The EC2 instance wants to connect to this AWS resource, the S3 bucket. There are two ways we can do it. The first is to set up an AWS access key and secret key with the aws configure command. The second is to attach an IAM role to the EC2 instance, and then we will be able to access the bucket depending on the policy. We will focus on the second option, the IAM role, where we create an IAM role and attach two policies to it, S3 read-only and EC2 read-only. Once we create the policies for the IAM role, we attach the role to the instance, and the instance will then be able to view the bucket. Let's try this out first, and then we will go into much more depth. I have my EC2 instance running, and there is an IAM role called KP Labs. If I go to IAM, click on Roles, and select KP Labs, there is one policy attached, AmazonS3ReadOnlyAccess.
Since this IAM role is attached to the EC2 instance, the instance inherits the policies that are part of the IAM role. Let me quickly log into the server and run aws s3 ls. I am able to list the buckets, because of the S3 read-only policy attached to the IAM role. Now, if you remember the EC2 instance metadata, I'm running curl on the security credentials for the KP Labs IAM role. It gives me three things: the access key, the secret key, and the session token. Note that these three things have an expiration, so after the expiry they become invalid. You can actually use these three values on your laptop; they do not need to be used from an instance that has the role attached. You can use them on your desktop computer and run aws s3 ls as well. We will be looking at how that works.

But one important thing to remember, and let's go back to our slides for this, is that the access key, secret key, and token we received in the EC2 instance via the instance metadata are not issued by the IAM role. They are actually issued by a service called STS, the Security Token Service, which is responsible for providing you those credentials. The IAM role has a trust relationship with the STS service, and through STS you receive those credentials. Let me show you. If you look into the IAM role, there is a Trust relationships tab here, and if you click on "Edit trust relationship," this is the policy. In the Principal, you see ec2.amazonaws.com. This is the service, and this service is allowed to call the STS AssumeRole action, so STS can generate those credentials for it.
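The trust relationship shown in the console is a small JSON policy. The document below is the standard shape of a trust policy that lets the EC2 service assume a role through STS. The helper function is a hypothetical convenience for checking which services a trust policy lets in, which can help when credentials unexpectedly stop appearing in the instance metadata.

```python
import json

# Trust policy allowing the EC2 service to assume the role via STS.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def services_that_can_assume(policy: dict) -> set:
    """Collect service principals that are allowed to call sts:AssumeRole."""
    services = set()
    for stmt in policy.get("Statement", []):
        actions = stmt.get("Action", [])
        actions = [actions] if isinstance(actions, str) else actions
        if stmt.get("Effect") != "Allow" or "sts:AssumeRole" not in actions:
            continue
        principal = stmt.get("Principal", {})
        if not isinstance(principal, dict):
            continue  # e.g. "Principal": "*", which names no specific service
        svc = principal.get("Service", [])
        services.update([svc] if isinstance(svc, str) else svc)
    return services


if __name__ == "__main__":
    print(json.dumps(EC2_TRUST_POLICY, indent=2))
    print(sorted(services_that_can_assume(EC2_TRUST_POLICY)))  # ['ec2.amazonaws.com']
```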
If we remove this trust relationship, we will not be able to generate the access and secret keys, and the aws s3 ls command will not work. So let me just cancel here. I hope you now see that it is STS that generates the credentials you see here, and I do not have to do anything directly to generate them. You can simply access the credentials, which are generated by STS, through the instance metadata.

Going back to the third slide: the temporary security credentials are the ones we just looked at via the instance metadata. To revise, AWS STS allows us to create temporary security credentials. There are a few important things to remember about temporary security credentials: they are short-term, and they expire after a certain duration. This is very important to remember. They keep on rotating, and the older credentials become invalid once their expiration is over. Since they have limited lifetimes, explicit key rotation is no longer needed. Key rotation is one of the challenging parts of permanent access and secret keys: we have to rotate them at a specific interval, let's assume 90 days, so that even if they are leaked to the public, they become invalid after a certain period of time. This is where temporary security credentials come into the picture. And this slide shows a screenshot of how we can generate temporary security credentials.
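The difference between the two kinds of credentials can be sketched as two checks: a long-term access key stays valid until someone rotates it, while temporary credentials carry their own expiration time. The 90-day interval is the example from this lecture, not a fixed AWS rule, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

ROTATION_INTERVAL = timedelta(days=90)  # the example interval from the lecture


def needs_rotation(created_at: datetime, now: datetime,
                   interval: timedelta = ROTATION_INTERVAL) -> bool:
    """Long-term access keys stay valid until someone rotates or deletes them."""
    return now - created_at >= interval


def is_expired(expiration: datetime, now: datetime) -> bool:
    """Temporary STS credentials stop working on their own at `expiration`."""
    return now >= expiration


if __name__ == "__main__":
    utc = timezone.utc
    created = datetime(2024, 1, 1, tzinfo=utc)
    print(needs_rotation(created, datetime(2024, 3, 1, tzinfo=utc)))  # False (60 days)
    print(needs_rotation(created, datetime(2024, 4, 1, tzinfo=utc)))  # True (91 days)
```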

8. AWS STS - Migrating EC2 Credentials - Part 2

Hey everyone, and welcome back. In the last lecture, we received credentials through the instance metadata, and we discussed that we can use these credentials outside of EC2, on our laptop as well. So let's understand how that works. I'll copy the three important parameters: the access key, the secret key, and the session token. I have my virtual machine running here, so let me log in to it. Perfect. Let me open gedit and paste the values here.

The first thing we'll do is open the AWS credentials file. I already have credentials configured for the Alice user from earlier, so I'll comment those out, and just to verify, if I run aws s3 ls now, it does not work. Let's configure the credentials that were generated by STS through the IAM role on the EC2 instance in our local credentials file. Let's name the profile ec2-role. I'll set aws_access_key_id to the access key ID and aws_secret_access_key to the secret key. There is one more field, the token. I have written down the format used to declare the session token, aws_session_token, so that I don't make any mistakes, and I'll copy the token and paste it here. Now we have copied all three values. If I run aws s3 ls now with the ec2-role profile, you will see that I get the same permissions that the role has.

There are a few important things to remember. Let's assume someone has logged in to the EC2 server and stolen these credentials. The good part is that these credentials are temporary credentials generated by STS, so they expire.
They will expire after a certain period of time, so even if someone has stolen these credentials, you don't have to worry too much, because they will eventually expire. The expiration time is also included in the instance metadata output along with the credentials. So, this is it for this lecture. I hope you got a basic understanding of how these temporary credentials are generated and how we can use them on other machines as well. I hope you liked it, and I look forward to seeing you in the next lecture.
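The manual copy-and-paste steps from this lecture can also be scripted. This Python sketch assumes the instance uses IMDSv2 and that the credential document has the AccessKeyId, SecretAccessKey, Token and Expiration fields; the function names, the role name, and the ec2-role profile name are hypothetical. fetch_role_credentials only works from inside an EC2 instance that has the role attached, while write_profile can run anywhere.

```python
import configparser
import json
import urllib.request
from datetime import datetime, timezone
from pathlib import Path

IMDS = "http://169.254.169.254/latest"


def fetch_role_credentials(role: str, timeout: float = 2.0) -> str:
    """Read the role's credential document from instance metadata (IMDSv2).

    Only works from inside an EC2 instance that has `role` attached.
    """
    token_req = urllib.request.Request(
        f"{IMDS}/api/token", method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "21600"})
    with urllib.request.urlopen(token_req, timeout=timeout) as resp:
        token = resp.read().decode()
    creds_req = urllib.request.Request(
        f"{IMDS}/meta-data/iam/security-credentials/{role}",
        headers={"X-aws-ec2-metadata-token": token})
    with urllib.request.urlopen(creds_req, timeout=timeout) as resp:
        return resp.read().decode()


def parse_expiration(doc: dict) -> datetime:
    """Expiration is a UTC timestamp such as 2024-01-01T12:00:00Z."""
    return datetime.strptime(doc["Expiration"], "%Y-%m-%dT%H:%M:%SZ").replace(
        tzinfo=timezone.utc)


def write_profile(body: str, credentials_file: Path, profile: str) -> datetime:
    """Store the three values as a named profile and return their expiry time.

    Note: configparser rewrites the file and drops any existing comments.
    """
    doc = json.loads(body)
    config = configparser.ConfigParser(interpolation=None)
    config.read(credentials_file)  # a missing file is treated as empty
    config[profile] = {
        "aws_access_key_id": doc["AccessKeyId"],
        "aws_secret_access_key": doc["SecretAccessKey"],
        "aws_session_token": doc["Token"],  # required for temporary credentials
    }
    with open(credentials_file, "w") as fh:
        config.write(fh)
    return parse_expiration(doc)
```

For example, on the instance you might print fetch_role_credentials("kplabs"), copy the JSON to your laptop, pass it to write_profile(body, Path.home() / ".aws" / "credentials", "ec2-role"), and then run aws s3 ls --profile ec2-role. Keep a backup of the credentials file first, since configparser does not preserve commented-out lines such as the old Alice credentials.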

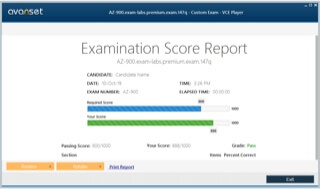

ExamCollection provides the complete prep materials in vce files format which include Amazon AWS Certified Solutions Architect - Professional certification exam dumps, practice test questions and answers, video training course and study guide which help the exam candidates to pass the exams quickly. Fast updates to Amazon AWS Certified Solutions Architect - Professional certification exam dumps, practice test questions and accurate answers vce verified by industry experts are taken from the latest pool of questions.

Amazon AWS Certified Solutions Architect - Professional Video Courses

Top Amazon Certification Exams

- AWS Certified Solutions Architect - Associate SAA-C03

- AWS Certified AI Practitioner AIF-C01

- AWS Certified Cloud Practitioner CLF-C02

- AWS Certified Solutions Architect - Professional SAP-C02

- AWS Certified Security - Specialty SCS-C02

- AWS Certified Data Engineer - Associate DEA-C01

- AWS Certified Machine Learning Engineer - Associate MLA-C01

- AWS Certified Developer - Associate DVA-C02

- AWS Certified Advanced Networking - Specialty ANS-C01

- AWS Certified Machine Learning - Specialty

- AWS Certified DevOps Engineer - Professional DOP-C02

- AWS Certified SysOps Administrator - Associate

- AWS-SysOps

Site Search:

Add Comment

Feel Free to Post Your Comments About EamCollection VCE Files which Include Amazon AWS Certified Solutions Architect - Professional Certification Exam Dumps, Practice Test Questions & Answers.